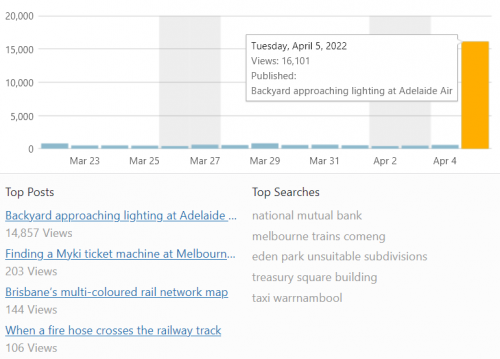

The story starts when I published a piece on the backyard approach lighting at Adelaide Airport to my blog.

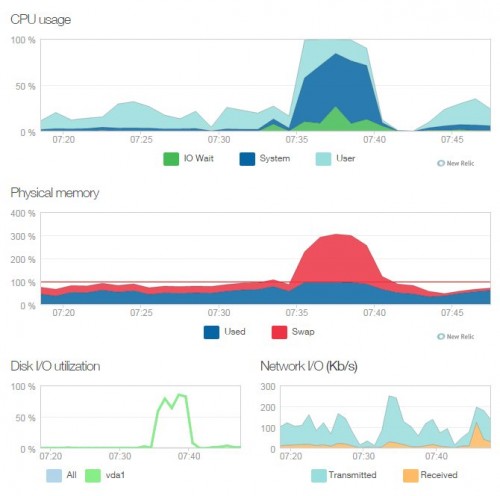

Later that day I noticed that my website was now running rather sluggishly, so I checked the logs and found an explosion in traffic.

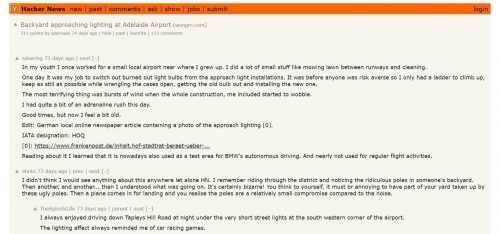

And the reason – someone over at Hacker News had shared a link to it, and it was getting heaps of traffic.

I’m an occasional visitor to the site, which is a social news website like Reddit, but with a focus on computer science and entrepreneurship – so I was kinda surprised to see it getting a run over there.

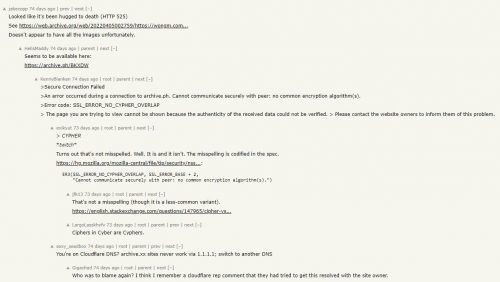

Of course, given the tech background of the readers, the discussion soon turned to the ‘hug of death’ all of the traffic was giving my poor web server.

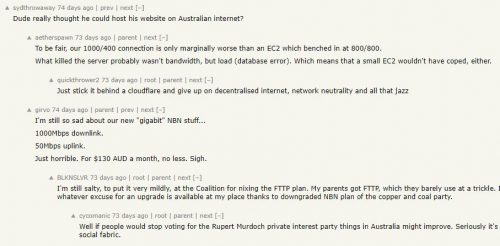

As well as jokes about the poor state of Australia’s internet.

And fixing it?

I run my websites on a virtual private server (VPS) that I manage myself, so unfortunately for me I was on my own to manage the flood of traffic.

My initial solution was the simplest, but also costly – just scale up my server to one with twice the CPU cores and twice the RAM.

That made my site more responsive, but I didn’t want to double my monthly web hosting costs, so it was time to get smart. The symptoms of an overloaded server, described below, sounded exactly like mine.

If your VPS gets overloaded, and reaches the maximum number of clients it can serve at once, it will serve those and other users will simply get a quick failure. They can then reload the page and maybe have greater success on the second try.

This sounds bad, but believe me, it’s much better to have these connections close quickly and leave the server in a healthy state than to have them hang open for an eternity. Surprisingly, a server with fewer child processes that responds quickly can perform better than a server with more child processes than it is able to handle.

I had to dig into the settings of Apache to optimise them for the resources my server had available.

Most operating systems’ default Apache configurations are not well suited for smaller servers – 25 child processes or more is common. If each of your Apache child processes uses 120MB of RAM, then your VPS would need 3GB just for Apache.

One visitor’s web browser may request 4 items from the website at once, so with only 7 or 8 people trying to load a page at the same time your cloud server can become overloaded. This causes the web page to hang in a constantly loading state for what seems like an eternity.
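The arithmetic behind those figures is easy to check. Here is a rough sketch in Python using the illustrative numbers quoted above (25 child processes, 120MB each, 4 requests per visitor), not measurements from any particular server. The function names are made up for this example.

```python
import math


def apache_ram_needed_mb(child_processes: int, mb_per_process: int) -> int:
    """RAM Apache could consume if every child process is busy at once."""
    return child_processes * mb_per_process


def visitors_to_saturate(child_processes: int, requests_per_visitor: int) -> int:
    """Smallest number of simultaneous visitors whose requests occupy
    every child process, so that further requests have to wait."""
    return math.ceil(child_processes / requests_per_visitor)


# Illustrative values taken from the paragraphs above.
ram_mb = apache_ram_needed_mb(child_processes=25, mb_per_process=120)
visitors = visitors_to_saturate(child_processes=25, requests_per_visitor=4)

print(f"Apache alone could need about {ram_mb} MB of RAM")
print(f"{visitors} simultaneous visitors are enough to keep every process busy")
```

With these inputs the first function gives 3000MB (roughly the 3GB mentioned above) and the second gives 7 visitors.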

It is often the case that the server will keep these dead Apache processes active, attempting to serve content long after the user gave up, which reduces the number of processes available to serve users and reduces the amount of system RAM available. This causes what is commonly known as a downward spiral that ends in a bad experience for both you and your site’s visitors.

What you should do is figure out how much RAM your application needs, and then figure out how much is left, and allocate most of that to Apache.
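As a rough illustration of that rule of thumb, the sketch below works out a process limit from a RAM budget. Every number in it is a made-up example rather than a figure from my server, the function name is hypothetical, and the 90% headroom factor is just my assumption for what "most of" the leftover RAM might mean. The result is the sort of value you would give to Apache's `MaxRequestWorkers` directive (called `MaxClients` before Apache 2.4).

```python
def max_apache_workers(
    total_ram_mb: int,
    reserved_mb: int,
    mb_per_process: int,
    headroom: float = 0.9,
) -> int:
    """Estimate how many Apache child processes fit in the RAM left over
    after the rest of the system (database, OS, etc.) has its share.

    headroom is the fraction of the leftover RAM handed to Apache;
    0.9 is an assumed value, not a recommendation from any tool.
    """
    available_mb = total_ram_mb - reserved_mb
    if available_mb <= 0 or mb_per_process <= 0:
        raise ValueError("no RAM left for Apache, or invalid process size")
    # Round down: one process too many is what triggers swapping.
    return max(1, int(available_mb * headroom // mb_per_process))


# Example values only: a 2GB VPS with 768MB set aside for everything else.
limit = max_apache_workers(total_ram_mb=2048, reserved_mb=768, mb_per_process=120)
print(f"Suggested process limit: {limit}")
```

Rounding down is deliberate: it is better to turn a few visitors away quickly than to let the server run out of memory.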

I used the handy apache2buddy tool to analyse the RAM usage on my server, and calculate the maximum number of processes Apache should be allowed to spin up.

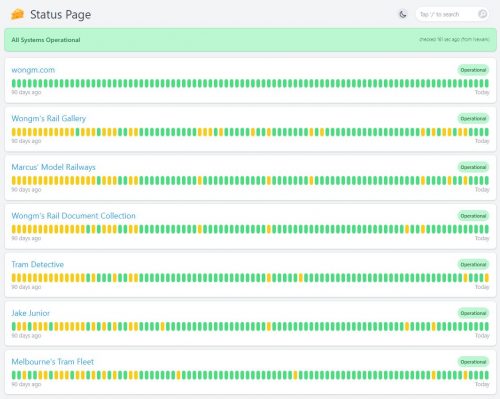

And since making these changes, the uptime of my websites has skyrocketed.

The status page found above is powered by the “Cloudflare Worker – Status Page” tool created by Adam Janiš.

Footnote: the ‘Slashdot effect’

Having your website taken down when a popular site links to you has been a thing for years – it’s called the ‘Slashdot effect’ after Slashdot, one of the early social news websites of the 2000s.
